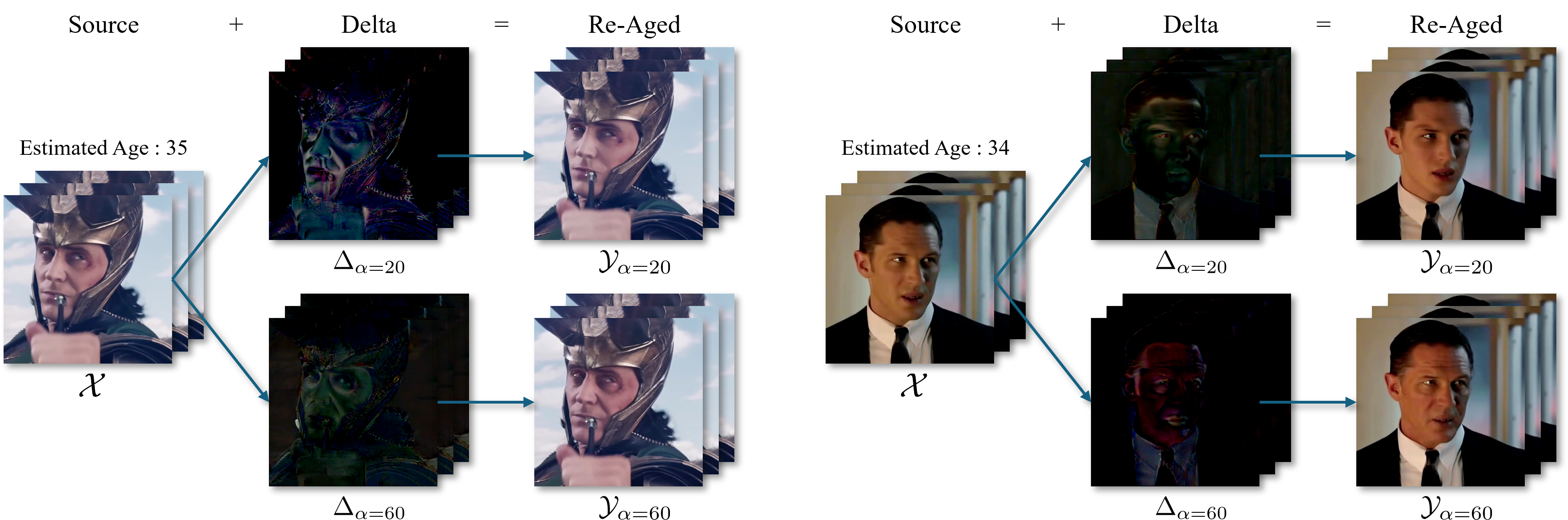

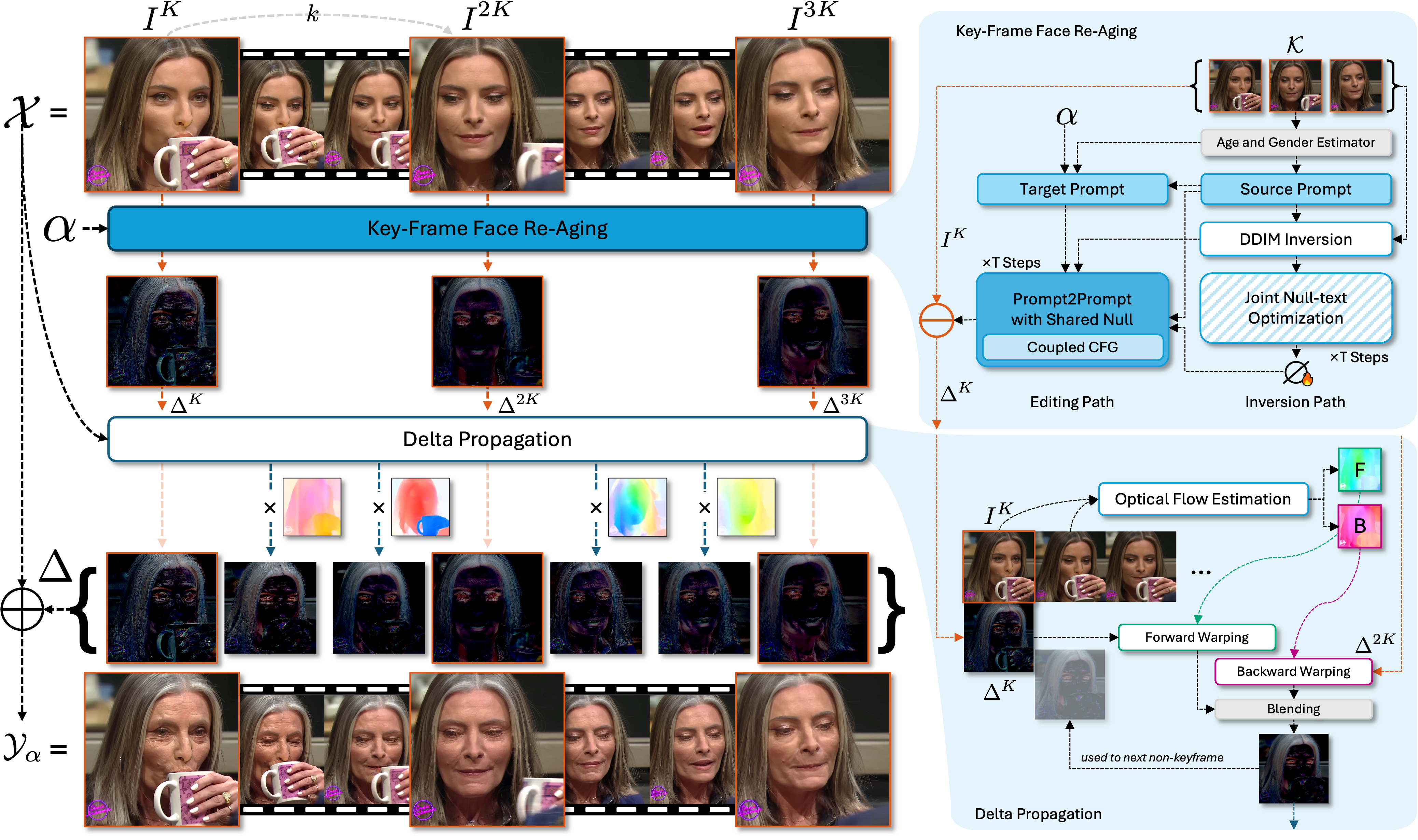

Machine learning-based face re-aging enables to automatically change age-related attributes given a target age, starkly reducing the need of manual labor among experienced artists. Variants of StyleGAN or diffusion models exhibited promising outcomes, but such approaches often lead to low fidelity on in-the-wild samples or still remain on image space. Thus, those video-level technics has lagged behind, despite video inference is imperative for practical aspects. To this end, we introduce diffusion-based re-aging models, marking the first attempt to incorporate diffusion scheme on video face re-aging task. Our optimization-based denoising approach can generate faithful re-aging results on various conditions, surpassing the shortcoming of GAN and VAE. Concretely, to improve global semantic coherence, joint null-text optimization is proposed where a single embedding is learned to coverage entire scene using keyframes. In addition, we leverage delta map which significantly amplifies image fidelity via residual manner. Thanks to the nature of delta, our system can lift the 2D diffusion models to video editing by neglecting age-irrelavant region and by propagating age-relavant pixels to adjacent frames through estimated optical flow without costly computing power. Our VideoTimeTravel ensures high fidelity, achieving unprecedented generalizable capability on in-the-wild cases such as occlusion, accessories and also satisfying industrial demand such as movie trailer, CGI.

Original

Ours

RAVE

CVPR 2024

VidToMe

CVPR 2024

BIVDiff

CVPR 2024

TokenFlow

ICLR 2024

Rerender-A-Video

Siggraph Asia 2023

Fate/Zero

ICCV 2023

Text2Video-Zero

ICCV 2023

Pix2Video

ICCV 2023

Original

Ours

STIT

Siggraph Asia 2022

StyleGANEX

ICCV 2023

DiffusionVAE

CVPR 2023

VIVE3D

CVPR 2023